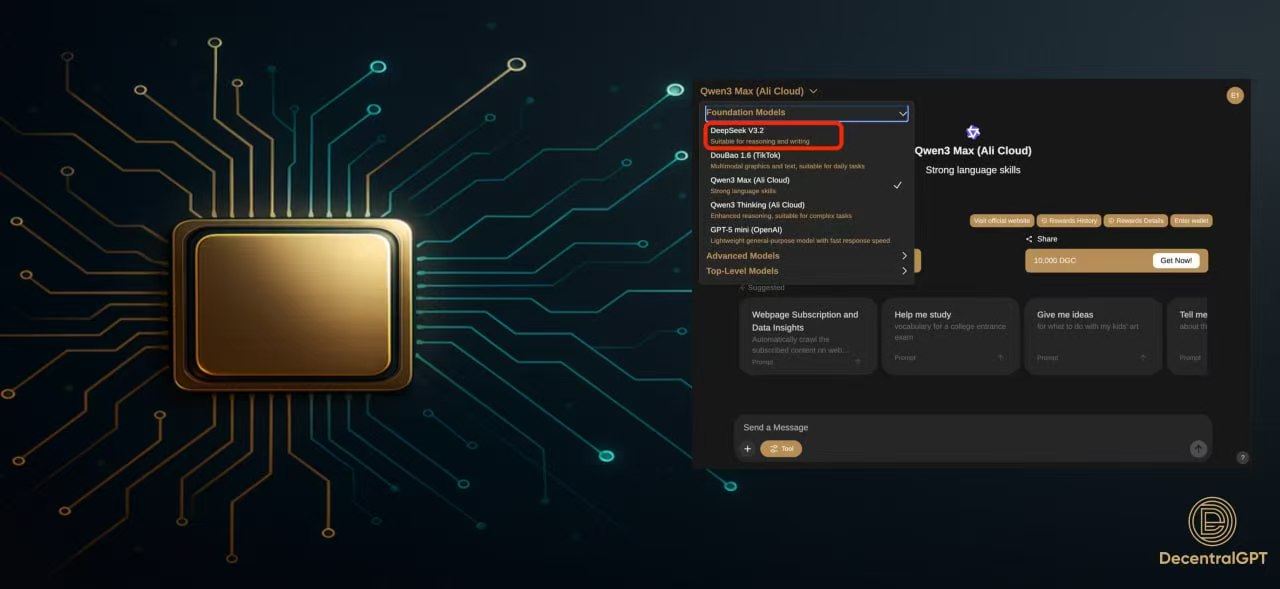

DeepSeek-V3.2-Exp Lands with Sparse Attention—Now Live on DecentralGPT’s Decentralized GPU Network

Abstract GPU node network graphic for DeepSeek-V3.2 Exp on DecentralGPT

What just happened

DeepSeek released V3.2-Exp, an experimental model that adds DeepSeek Sparse Attention (DSA), a fine-grained sparse attention mechanism designed to improve long-context training and inference efficiency while keeping output quality roughly on par with V3.1-Terminus. DeepSeek also highlighted lower compute costs and broad benchmark parity. (Sources: Hugging Face, GitHub)

Independent coverage echoes the focus on cheaper long-context inference and competitive performance; some outlets note API price cuts accompanying the launch. (Sources: Reuters, TechCrunch, Analytics India Magazine)

What this means on DecentralGPT

We’ve added DeepSeek-V3.2-Exp to DecentralGPT’s model lineup—available both in DeGPT (consumer chat) and via the API. You can route prompts to nearby GPU nodes (USA / Singapore / Korea) and tap the model’s long-context efficiency without changing your app architecture.

Why pair V3.2-Exp with a decentralized GPU network?

• Lower perceived latency: Regional routing brings inference closer to users, so V3.2-Exp’s efficiency gains translate into snappier responses.

• Predictable costs at scale: DSA reduces compute per token; distributed capacity helps smooth local price spikes. (Source: Reuters)

• Vendor-agnostic resilience: Run workloads across heterogeneous providers and regions—no single-vendor choke points.

• Built for ops: Use the same endpoints you already call; swap or fall back to other models as needed.
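To make the regional routing idea concrete, here is a minimal sketch of how a client might order regions by measured latency and skip any region whose probe failed. The function name `rank_regions`, the region labels, and the latency numbers are illustrative assumptions, not part of DecentralGPT's API; how you measure latency is up to your own client.

```python
from typing import Dict, List, Optional


def rank_regions(latency_ms: Dict[str, Optional[float]]) -> List[str]:
    """Return reachable regions ordered from lowest to highest latency.

    A value of None marks a region whose probe failed; it is skipped.
    """
    reachable = {region: ms for region, ms in latency_ms.items() if ms is not None}
    return sorted(reachable, key=lambda region: reachable[region])


# Made-up probe results in milliseconds, for illustration only.
probes = {"USA": 182.0, "SG": 41.5, "KR": None}
print(rank_regions(probes))  # ['SG', 'USA']
```

The first entry is the region to try first; the remaining entries give a natural fallback order if that region becomes unavailable.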

A quick primer on DSA (for builders)

DSA is DeepSeek's fine-grained sparse attention: rather than attending to every token in the context, the model attends to a selected subset, which cuts computation while preserving output quality over long context windows. In the V3.2-Exp release, DeepSeek reports benchmark parity with V3.1-Terminus and improved efficiency for extended sequences, which is exactly where costs typically spike. (Source: Hugging Face)
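For intuition only, the sketch below shows a generic top-k sparse attention step for a single query in plain Python. It is not DeepSeek's DSA implementation: the function names and the selection rule (keep the k highest-scoring keys) are assumptions chosen to illustrate the general idea of attending to a subset of tokens. Note that this toy still scores every key before selecting, so it demonstrates the selection step rather than the actual compute savings.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a non-empty list."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(
    query: Vector, keys: List[Vector], values: List[Vector], k: int
) -> Vector:
    """Attend over only the k keys with the highest scaled dot-product score."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Indices of the k best-scoring keys; all other positions are ignored.
    kept = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in kept])
    dim = len(values[0])
    return [sum(w * values[i][d] for w, i in zip(weights, kept)) for d in range(dim)]


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(topk_sparse_attention(query, keys, values, k=1))  # [1.0, 2.0]
```

Setting `k` equal to the number of keys recovers ordinary dense attention, which makes the toy easy to compare against a dense baseline.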

How to try it today

• DeGPT (B2C): Open DeGPT, select DeepSeek-V3.2-Exp, start chatting.

• API (B2B): Set the model to deepseek-v3.2-exp, choose a region (USA/SG/KR), and stream outputs. Keep other models in your routing/fallback chain as usual.
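The API steps above can be sketched as follows. Only the model name `deepseek-v3.2-exp` and the region labels come from this post; the payload field names, the `backup-model` identifier, and the `send` callable are hypothetical stand-ins, so check the DecentralGPT API documentation for the real request format. The transport is injected as a function so the sketch runs without network access.

```python
from typing import Callable, Dict, Iterable, List


def build_request(model: str, region: str, prompt: str) -> Dict[str, object]:
    """Assemble a request payload. Field names are assumed, not official."""
    return {
        "model": model,
        "region": region,
        "stream": True,
        "messages": [{"role": "user", "content": prompt}],
    }


def stream_with_fallback(
    models: List[str],
    region: str,
    prompt: str,
    send: Callable[[Dict[str, object]], Iterable[str]],
) -> List[str]:
    """Try each model in order; return the chunks from the first that succeeds."""
    last_error = None
    for model in models:
        try:
            # Collect the whole stream; a failure mid-stream moves to the next model.
            return list(send(build_request(model, region, prompt)))
        except RuntimeError as exc:
            last_error = exc
    raise RuntimeError("all models in the fallback chain failed") from last_error


def fake_send(payload: Dict[str, object]) -> Iterable[str]:
    """Stand-in transport: the primary model fails, any other model streams."""
    if payload["model"] == "deepseek-v3.2-exp":
        raise RuntimeError("node unavailable")
    yield "hello "
    yield "world"


chunks = stream_with_fallback(
    ["deepseek-v3.2-exp", "backup-model"], "SG", "Summarize this document.", fake_send
)
print("".join(chunks))  # hello world
```

In a real client, `send` would wrap your HTTP streaming call, and you would likely print chunks as they arrive rather than collecting them first.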

A word on privacy

We treat voice inputs like text inputs: they are used to generate your reply and handled under the same account settings and policies. Check our website for the latest details before you update.

The takeaway

V3.2-Exp pushes long-context efficiency forward; DecentralGPT turns those gains into real-world speed and cost stability with regional, decentralized routing. If you’ve been waiting to scale long prompts without blowing the budget, this is a practical step.

Try V3.2-Exp in DeGPT: https://www.degpt.ai/

Get an API key and pick your region: https://www.decentralgpt.org/