Traditional LLM Platforms vs DecentralGPT: 5 Key Differences That Matter for Real Usage

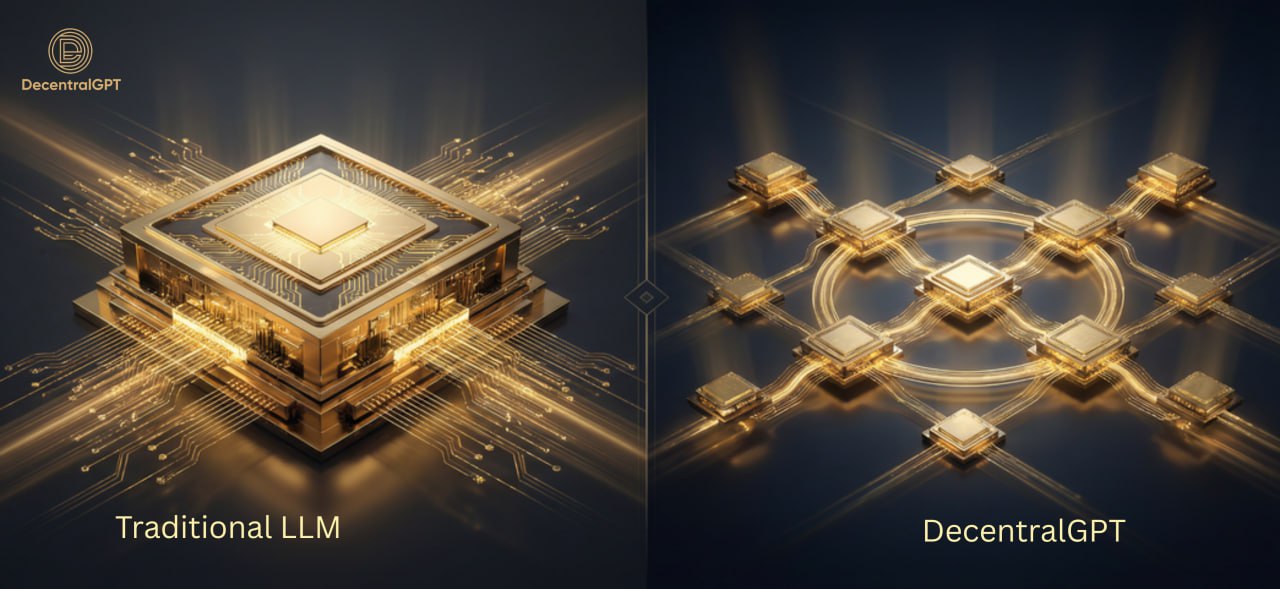

Comparison illustration showing centralized vs decentralized AI infrastructure

The Centralized vs Decentralized AI Choice

Most "traditional LLM platforms" work the same way: one company runs the servers, controls the API, sets the pricing, and decides which models you can use.

DecentralGPT takes a different approach. It is a decentralized, distributed AI inference network designed to support multiple large language models and make advanced AI more accessible.

If you're choosing between DecentralGPT and a traditional LLM product, here are the differences that matter in real life.

1) Compute Costs: Centralized Clouds vs Idle GPUs Worldwide

Traditional LLM platforms rely on centralized GPU infrastructure. That often means higher fixed costs and less flexibility in how compute capacity is sourced.

DecentralGPT's network model lets idle GPUs around the world join the DecentralGPT Network and contribute computation, which can lower overall inference costs by putting underutilized hardware to work.

For users, the takeaway is simple: a decentralized inference network creates a path toward more affordable AI at scale.

2) Model Choice: One Model vs Multi-Model Freedom

Traditional platforms usually keep you inside one ecosystem. If you want a different model style (writing vs coding vs reasoning), you often need another subscription.

DecentralGPT supports a variety of large language models and is built around multi-model usage.

This matters because no single model is best for everything. Real users switch depending on the task:

- Writing and content clarity

- Coding and debugging

- Reasoning and long-form analysis

- Web3 research and documentation

With DecentralGPT, the goal is to make those switches easy inside one product experience (DeGPT): https://www.degpt.ai/

3) Memory Continuity: Switching Models Without "Starting Over"

One pain point on many platforms: when you switch tools or models, you lose context.

DecentralGPT highlights memory sharing between models as an advantage: users can switch models (for example, between ChatGPT-style and DeepSeek-style options) while keeping their earlier conversation memory.

That's a practical productivity win: less repetition, fewer restarts, smoother workflows.
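To make the idea of memory continuity concrete, here is a minimal sketch of one simple way it could work: a single conversation history is kept outside any one model and passed in full to whichever model handles the next turn. This is an illustrative assumption, not DecentralGPT's or DeGPT's actual implementation, and the names `SharedConversation`, `echo_model`, `model-a`, and `model-b` are hypothetical stand-ins rather than real APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


# Hypothetical: a "model" is any function that reads the full history and returns a reply.
# These are placeholders, not DecentralGPT or DeGPT APIs.
ModelFn = Callable[[List[Message]], str]


@dataclass
class SharedConversation:
    models: Dict[str, ModelFn]
    history: List[Message] = field(default_factory=list)

    def ask(self, model_name: str, prompt: str) -> str:
        # The same history is shared no matter which model answers this turn.
        self.history.append(Message("user", prompt))
        reply = self.models[model_name](self.history)
        self.history.append(Message("assistant", reply))
        return reply


def echo_model(name: str) -> ModelFn:
    # Hypothetical stand-in model that reports how much context it received.
    def model(history: List[Message]) -> str:
        return f"{name} saw {len(history)} messages"
    return model


conv = SharedConversation(models={"model-a": echo_model("model-a"),
                                  "model-b": echo_model("model-b")})
print(conv.ask("model-a", "Summarize this whitepaper."))  # model-a saw 1 messages
print(conv.ask("model-b", "Now turn it into a tweet."))   # model-b saw 3 messages
```

The second model receives the earlier prompt and reply along with the new request, so the user does not have to restate anything after switching. A real system would also need to handle per-model context limits and storage, which this sketch leaves out.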

4) Openness for Builders: Centralized APIs vs Decentralized APIs

If you're building a product on top of a traditional LLM provider, you depend on that provider's terms and access policies, and API access can be restricted in some regions or for some entities.

DecentralGPT states that it provides decentralized APIs that "cannot be blocked against a specific entity," enabling broader access for developers and organizations to build applications on top of the network.

For Web3 builders, this is a major difference: it supports the idea of AI as open infrastructure, not a closed gate.

5) Values and Direction: Closed SaaS vs Decentralized AI Infrastructure

DecentralGPT's positioning is clear: it aims to build AI that is safe, privacy-protective, democratic, transparent, open-source, and universally accessible.

Traditional LLM platforms can be great products, but they are still centralized by design. DecentralGPT is built to be infrastructure-like: more open, more distributed, and aligned with Web3 principles.

Quick Summary: Who Should Use Which?

If you want a simple rule:

Traditional LLM platforms are fine if you only need one vendor's experience and don't mind lock-in.

DecentralGPT is a better fit if you want:

- Multi-model flexibility in one place

- A decentralized inference network aimed at lower cost and greater resilience

- Memory continuity across model switches

- Decentralized APIs built for broader access

Call to Action

Try the multi-model DeGPT product here: https://www.degpt.ai/

Learn more about DecentralGPT's decentralized inference network and ecosystem: https://www.decentralgpt.org/